Implementation

Dagger gives node implementations 30 seconds to respond to each invocation; later responses are discarded. For long-running compute nodes this period is extended to 30 minutes, which must be requested as a QoS requirement in sift.json.
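Because late responses are simply discarded, a JavaScript node may prefer to stop waiting slightly before the limit. A minimal sketch, assuming the node does its work asynchronously: `withDeadline` and `SOFT_DEADLINE_MS` are hypothetical names, not part of Dagger, and the 25-second value is an arbitrary assumption that leaves some headroom.

```javascript
// Hypothetical helper, not part of Dagger: race the node's async work against a
// soft deadline kept below Dagger's 30-second limit.
const SOFT_DEADLINE_MS = 25 * 1000; // assumption: roughly 5 s of headroom

function withDeadline(work, ms, fallback) {
  let timer;
  const timeout = new Promise((resolve) => {
    // Settle with the fallback value if the work is too slow.
    timer = setTimeout(() => resolve(fallback), ms);
  });
  // Whichever settles first wins; clear the timer either way so it does not linger.
  return Promise.race([work, timeout]).finally(() => clearTimeout(timer));
}
```

`Promise.race` does not cancel the slower work; it only stops the node waiting for it. The fallback should itself be something the node is allowed to resolve to, for example a dictionary written to a hypothetical `timeouts` bucket.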

Node input

The input object provided is a dictionary containing up to four fields:

```json
{
  "input": {
    "in": {},
    "query": [],
    "with": {},
    "get": []
  }
}
```

| Field | Description |
|---|---|
| in | A BucketedData object containing the left selected data. |
| query | An array containing the key hierarchy for the current iteration that has been selected for the provided data set. For example, if we group by the first level of the hierarchy (`/*`) on a key such as `20160304/abcdefg`, the value of `query` in one of the iterations will be `['20160304', '*']`. |
| with | A BucketedData object containing the right selected data, if a selection was provided. |
| get | An array containing the result of the get for a specific key across all the available buckets. |
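A minimal sketch of a JavaScript node that reads these fields. It assumes the node module exports a single function receiving the object shown under `input` (called `got` here, a hypothetical name); the output bucket `counts` is also hypothetical.

```javascript
// Sketch of a JavaScript node reading the input fields.
// Assumption: the module exports one function that receives the object shown
// under "input" above; `got` and the 'counts' bucket are hypothetical names.
function node(got) {
  const inData = (got.in && got.in.data) || [];       // left selection (BucketedData)
  const withData = (got.with && got.with.data) || []; // right selection, if provided
  const query = got.query || [];                      // e.g. ['20160304', '*']

  // Use the concrete (non-wildcard) hierarchy levels as the output key.
  const groupKey = query.filter((level) => level !== '*').join('/') || 'all';

  return {
    name: 'counts',
    key: groupKey,
    value: { left: inData.length, right: withData.length },
  };
}

module.exports = node;
```

Returning a single dictionary like this is one of the allowed node outputs described under Node outputs.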

Example value of `in` (and `with`):

```json
{
  "bucket": "bucket_name",
  "data": []
}
```

Example value of `get`:

```json
[{
  "bucket": "bucket_name",
  "key": "key_name",
  "data": [{
    "key": "key_name",
    "value": <bytes>
  }]
}]
```

Java, Scala and Clojure

For Java, Scala and Clojure, a ComputeRequest object is passed to the node instead.

Data

Self-evidently, this is the smallest quantum of data an input can source or sink, and also the smallest unit of work a node can operate on. Data contains:

```js
"data": {
  "key": "key1",
  "value": [],        // byte array
  "epoch": 1457700316
}
```

| Field | Description |
|---|---|
| key | A string that uniquely identifies this data element. Keys are a very important part of Dagger, as operations can be performed on the key-space. For non-trivial applications, you design your key-space much like you design your code. Keys are limited to UTF-8 strings and may contain a hierarchy using the / character as a separator. Within a hierarchy, selections can be made if the data is being selected from a store. Currently, a key can have a maximum hierarchy depth of 7. If two keys are identical, the system treats them as equivalent and any previous value with the same key is overwritten. Depending on the port, data elements may be repeated if they present a previously used key. Also depending on the port, you may be responsible for creating this key, or it may be created for you based on known attributes of the data unit. |
| value | An opaque value associated with this key. The platform makes no assumptions about the type of this field and encodes it internally into a byte array for transport and storage. This field is encrypted at rest in common data environments such as on the Red Sift servers. |
| epoch | An optional UTC Unix timestamp (the number of seconds elapsed since January 1, 1970 UTC) representing the date associated with this event. Where possible, transformations should preserve this value so the platform can reason about the temporal dimension of collections of events. This may not be possible if, for example, events are aggregated or otherwise transformed, in which case this field should be nulled out or zeroed. |
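The epoch rules can be illustrated with a few hypothetical JavaScript helpers (none of these names come from Dagger): converting a `Date` to whole Unix seconds, preserving `epoch` across a one-to-one transformation, and zeroing it when events are aggregated. This is a sketch that assumes values are UTF-8 text.

```javascript
// Hypothetical helpers illustrating the epoch rules; not part of Dagger.

// Convert a JavaScript Date to a Unix epoch in whole seconds (UTC).
function toEpoch(date) {
  return Math.floor(date.getTime() / 1000);
}

// One-to-one transformation: keep the original epoch so temporal reasoning still works.
function uppercaseValue(datum) {
  return {
    key: datum.key,
    value: datum.value.toString('utf8').toUpperCase(),
    epoch: datum.epoch,
  };
}

// Aggregation over many events: no single event date applies, so zero the epoch.
function countEvents(data, key) {
  return { key, value: String(data.length), epoch: 0 };
}
```

For example, `toEpoch(new Date(Date.UTC(2016, 2, 11, 12, 45, 16)))` gives `1457700316`, the epoch used in the example above.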

Keys

Keys themselves are not encrypted, so when creating your own keys, take care when designing your Sift not to accidentally expose PII in this field.
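One way to keep PII out of keys, offered as an assumption rather than anything Dagger requires, is to key records by a keyed hash (HMAC-SHA-256) of the sensitive value. In this sketch `keyForEmail`, the `senders` hierarchy level and the `secret` argument are hypothetical; the secret could be supplied through an environment variable as described later on this page.

```javascript
const crypto = require('crypto');

// One option (an assumption, not a Dagger requirement): key records by a keyed
// hash of the PII rather than the raw value. `secret` would come from an
// environment variable; 'senders' is a hypothetical first hierarchy level.
function keyForEmail(emailAddress, secret) {
  const digest = crypto
    .createHmac('sha256', secret)
    .update(emailAddress.trim().toLowerCase(), 'utf8') // normalise before hashing
    .digest('hex');                                    // 64 hex characters
  return `senders/${digest}`;
}
```

Because the same address always maps to the same key, selections and overwrites on the key-space still work, while the raw address never appears in the key. Using a secret rather than a plain hash makes it harder to recover addresses by hashing guesses.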

`key` and `key$schema` field limitations

- only UTF-8 strings
- slash (`/`) is the separator of the hierarchy
- up to 7 levels in a hierarchy
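A minimal sketch of building keys that respect these limitations. `buildKey` is a hypothetical helper, not a Dagger API; it joins levels with `/`, rejects more than 7 levels, rejects levels that themselves contain `/`, and rejects strings that cannot be encoded as UTF-8.

```javascript
const MAX_KEY_DEPTH = 7; // maximum hierarchy depth stated above

// Hypothetical helper: build a hierarchical key from its levels, enforcing the
// limits listed above (UTF-8 only, '/' as separator, at most 7 levels).
function buildKey(levels) {
  if (levels.length === 0 || levels.length > MAX_KEY_DEPTH) {
    throw new RangeError(`a key needs 1 to ${MAX_KEY_DEPTH} levels, got ${levels.length}`);
  }
  for (const level of levels) {
    if (typeof level !== 'string' || level.includes('/')) {
      // A '/' inside a level would silently add an extra hierarchy level.
      throw new TypeError(`invalid key level: ${JSON.stringify(level)}`);
    }
    // Lone UTF-16 surrogates cannot be encoded as UTF-8; a round trip through
    // Buffer replaces them with U+FFFD, so the string would change.
    if (Buffer.from(level, 'utf8').toString('utf8') !== level) {
      throw new TypeError(`key level is not valid UTF-8: ${JSON.stringify(level)}`);
    }
  }
  return levels.join('/');
}
```

`buildKey(['20160304', 'abcdefg'])` returns `'20160304/abcdefg'`, the key shape used in the `query` example earlier.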

'value' field

This field is encoded as a byte array internally and is accessible in the respective node implementation as:

- JavaScript: Buffer
- Python 2.7: str
- Python 3: bytes
- Java, Scala, Clojure: byte[]
- Swift: Data?
- Go: []byte

You will then need to convert it back to the representation you need.
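For example, if an upstream node wrote a JSON object as its `value`, a JavaScript node receives a `Buffer` and has to decode it. A sketch assuming the bytes are UTF-8 encoded JSON; `decodeJsonValue` is a hypothetical name.

```javascript
// Sketch: decode a datum's value (a Buffer in JavaScript nodes) back into an
// object. Assumes the producer wrote UTF-8 encoded JSON.
function decodeJsonValue(datum) {
  if (!datum.value || datum.value.length === 0) {
    return null; // an empty value has nothing to parse
  }
  return JSON.parse(datum.value.toString('utf8'));
}
```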

BucketedData

In most instances, data is wrapped as BucketedData, which adds one or more of the following fields to the data:

| Field | Description |
|---|---|
| bucket | Bucket is simply a string address, but it is the mechanism used to identify where data came from or where it should go to. Bucket names should not contain leading or trailing whitespace, cannot start with `_`, and cannot include `%`, `{`, `}` or `:`. |
| generation | Optional, read-only. Some data sources, such as Stores, can keep track of how many times a key has been written. If available, this will be served to your Sift. You may treat this as an atomic increment and use it to multiplex data if you expect events to repeat with the same key but wish to do work on them individually. |
| twoI | Optional, write-only. When configuring your DAG on the server, you may set output ports to write secondary indices. Your frontend code can use these values for additional functionality, e.g. segmentation in the data UI. |
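The bucket naming rules above can be checked before a node emits data. A sketch with a hypothetical `isValidBucketName` helper; treating the empty string as invalid is an extra assumption not stated above. Platform buckets such as `_email.id` start with `_`, so this check is only meant for buckets you name yourself.

```javascript
// Hypothetical validator for the bucket-name rules listed above.
function isValidBucketName(name) {
  return (
    typeof name === 'string' &&
    name.length > 0 &&          // assumption: empty names are rejected
    name === name.trim() &&     // no leading or trailing whitespace
    !name.startsWith('_') &&    // cannot start with '_'
    !/[%{}:]/.test(name)        // cannot include %, {, } or :
  );
}
```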

Node outputs

An implementation can return nothing, i.e. undefined or null in Javascript, if it wishes to do nothing with the data.

If it returns any data at all, the minimum requirement is a dictionary with a `key` field. If no `value` is provided for this key, the value is assumed to be empty. The third field, `name`, is the name of the store the data is meant to be stored in.

```js
"datum": {
  "name": "bucket1",   // name of bucket
  "key": "key1",       // only string
  "value": {},         // string OR {} OR [] OR byte array
  "epoch": 1457976598  // optional
}
```

Allowed outputs for a node:

| Type | Description |
|---|---|
| null value | null or undefined in JavaScript, null in Java and Scala, None in Python, nil in Clojure, etc. |
| dictionary | A dictionary (an object in JavaScript/JSON) with `name`, `key`, `value` and an optional `epoch`. `value` should be one of: a JSON-serialisable object (object or array); a string; a byte array (`Buffer` in JavaScript, `bytes` in Python, `byte[]` in Java, Scala and Clojure, etc.). In Java, Scala and Clojure, the ComputeResponse object should be used. |
| array | An array containing the dictionaries described above (in Java, Scala and Clojure, `ComputeResponse[]` or `List<ComputeResponse>`). |
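Putting the allowed outputs together, a minimal sketch of a JavaScript node that returns either `null` or an array of dictionaries. The bucket names `counts` and `latest` are hypothetical and would need to be declared as outputs of the node in your DAG.

```javascript
// Sketch: a node writing to two output stores. 'counts' and 'latest' are
// hypothetical bucket names; with more than one output store, `name` is required.
function node(got) {
  const data = (got.in && got.in.data) || [];
  if (data.length === 0) {
    return null; // emit nothing
  }
  // Pick the most recent datum by epoch (missing epochs count as 0).
  const latest = data.reduce((a, b) => ((b.epoch || 0) > (a.epoch || 0) ? b : a));
  return [
    // Aggregate over all data: a string value, no epoch since no single date applies.
    { name: 'counts', key: 'total', value: String(data.length) },
    // One-to-one pass-through: the byte-array value and epoch are preserved.
    { name: 'latest', key: latest.key, value: latest.value, epoch: latest.epoch },
  ];
}

module.exports = node;
```

The `counts` entry aggregates many events, so it leaves out `epoch`; the `latest` entry passes a single datum through, so it keeps the original `epoch` and byte-array `value`.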

Javascript & Promises

A node implemented in JavaScript can also return a Promise, or an array of Promises, but each Promise should resolve to the dictionary described above.
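A minimal sketch of the Promise form: the node returns an array of Promises, each resolving to an output dictionary. `lookup` stands in for any asynchronous operation and, like the `keyLengths` bucket, is hypothetical.

```javascript
// Hypothetical async operation; here it just resolves to the key's length.
function lookup(key) {
  return new Promise((resolve) => setImmediate(() => resolve(key.length)));
}

// Sketch: return one Promise per input datum, each resolving to a dictionary.
function node(got) {
  const data = (got.in && got.in.data) || [];
  return data.map((datum) =>
    lookup(datum.key).then((length) => ({
      name: 'keyLengths', // hypothetical bucket
      key: datum.key,
      value: String(length),
      epoch: datum.epoch, // one-to-one, so the epoch is preserved
    }))
  );
}

module.exports = node;
```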

'name' field

If more than one store is defined as an output for a node, the `name` property is required to avoid errors. If there is only one store it can be omitted, although it is recommended to always add it for completeness.

Node outputs for Thread and Thread List Views

An implementation can emit the email- or thread-specific data it needs to the special buckets `_email.id` and `_email.tid`, in the same way described so far. The only difference is that, to target data at the email thread view or the thread list view, the data must be output under the `detail` or the `list` attribute respectively.

- All the data under the `detail` attribute will become available in the Sift Controller under the `state.params.detail` key, as described in Sift Controller.
- All the data under the `list` attribute will become available in the `listInfo` argument, as described in Email Client Controller.

```js
datum = {
  "key": "key1",
  "value": {
    "detail": {},
    "list": {}
  }
}
```
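A sketch of a node that targets both views through the `_email.tid` bucket. It assumes, hypothetically, that each input datum is keyed by thread id and that its value is UTF-8 JSON with a `subject` field; the `subject` and `title` properties inside `detail` and `list` are likewise illustrative, since their contents are up to your Sift Controller and Email Client Controller.

```javascript
// Sketch: emit thread-level data for the thread list and email thread views.
// Assumption: input keys are thread ids and values are UTF-8 JSON with `subject`.
function node(got) {
  const data = (got.in && got.in.data) || [];
  return data.map((datum) => {
    const message = JSON.parse(datum.value.toString('utf8'));
    return {
      name: '_email.tid',
      key: datum.key, // thread id (assumed)
      value: {
        detail: { subject: message.subject }, // becomes state.params.detail in the Sift Controller
        list: { title: message.subject },     // becomes listInfo in the Email Client Controller
      },
    };
  });
}

module.exports = node;
```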

Environment Variables

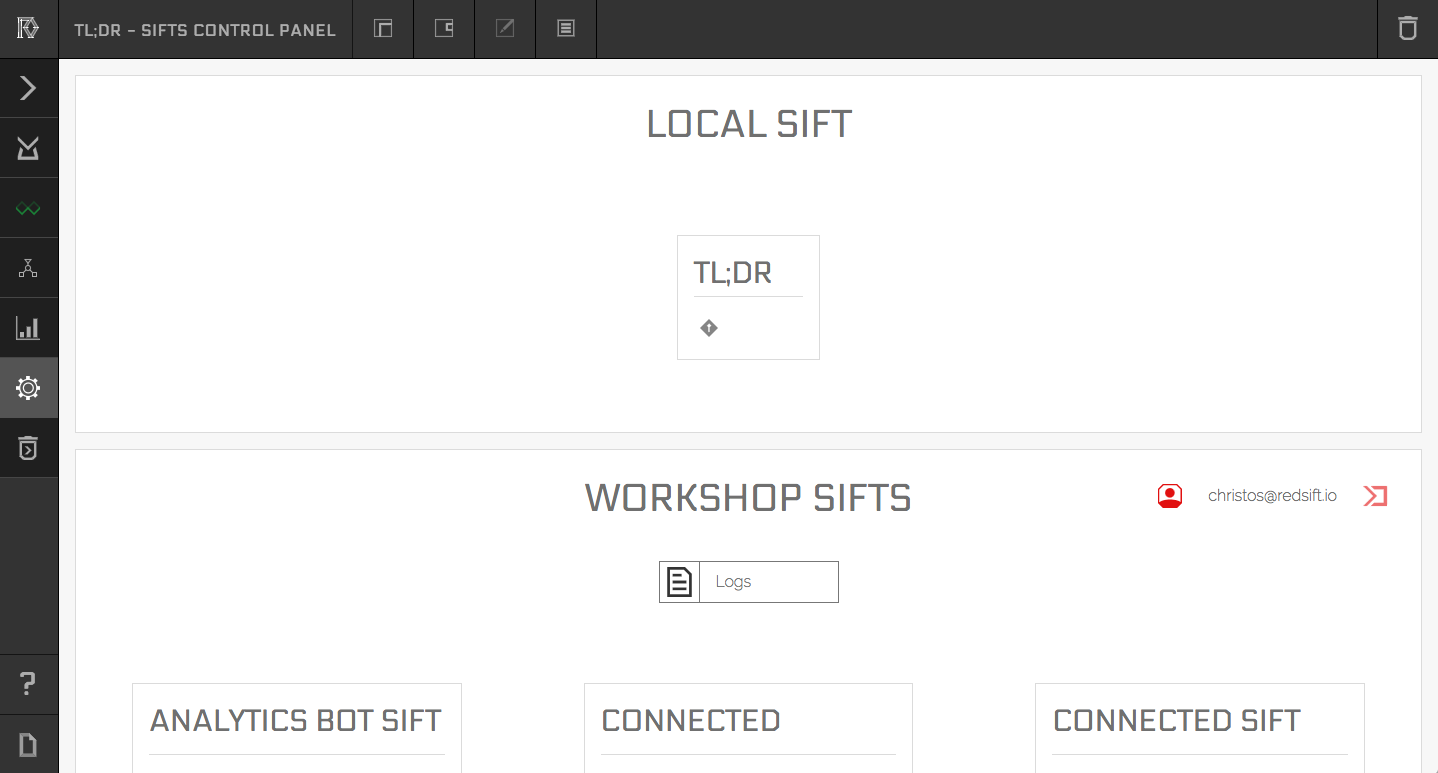

Things like secrets and configuration options can be passed to the node implementations through the usage of environment variables. Those can be defined through the Sift Control Panel in the SDK, the cog icon on the left, which looks like this:

Workshop Sifts

For anything relating to Sifts running in the Workshop, you will first need to authenticate. For example, the screen above was captured after I authenticated with my account.

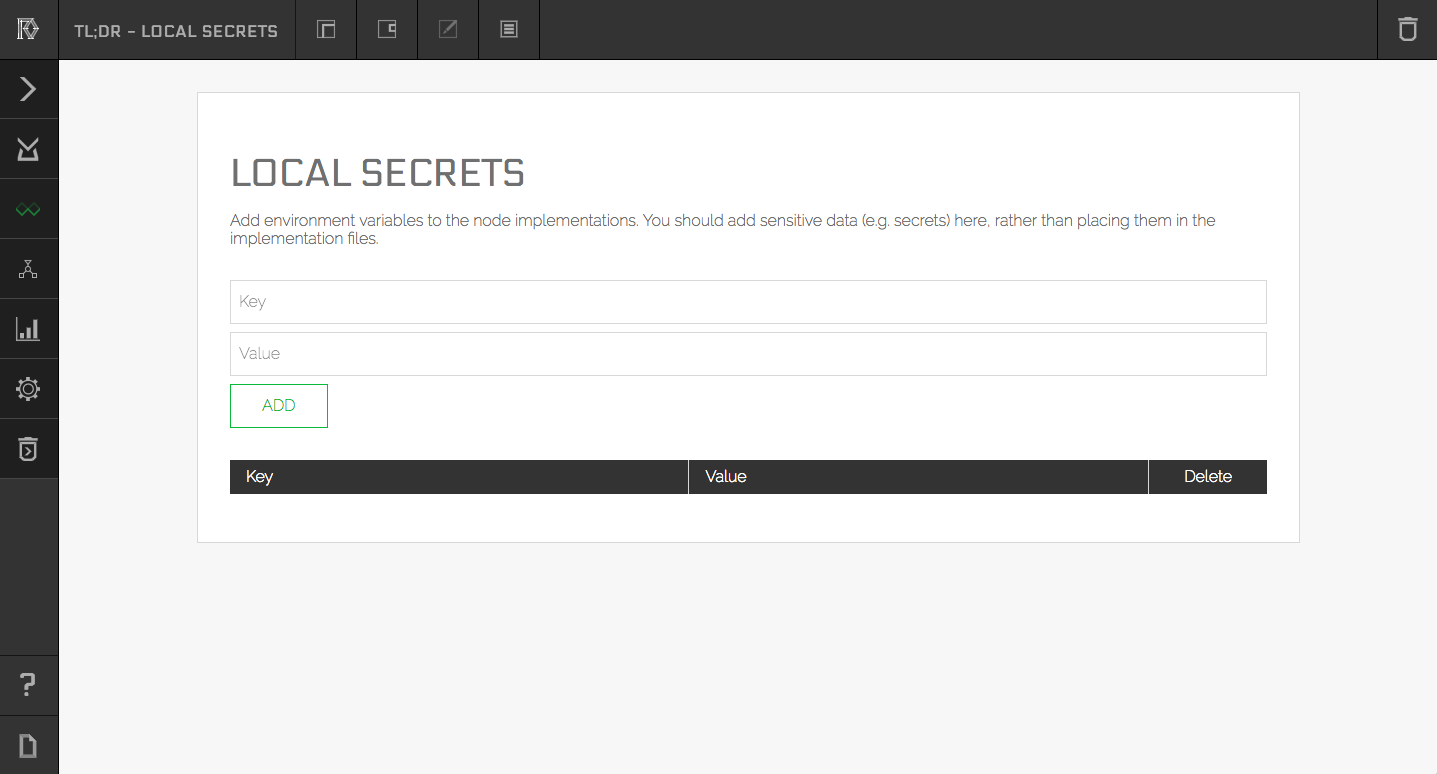

First section is for the Sift you are developing locally in this case the TL;DR, next section is for the Sifts you have deployed in your Workshop. You can access the secrets menu through the keyhole icon on the relevant Sift tile. After clicking it you should see a screen similar to this:

Here you can add all the environment variables you would like to pass to your nodes. In a node implementation you can then access them in the standard way your language provides for reading environment variables; for example, in JavaScript they are all available under `process.env`.
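A minimal sketch of reading such variables in a JavaScript node. `API_TOKEN` and `POLL_LIMIT` are hypothetical variable names, and `requireEnv` and `optionalIntEnv` are illustrative helpers rather than part of the SDK. Failing loudly on a missing secret makes a misconfigured branch easier to spot.

```javascript
// Read a required variable (e.g. a hypothetical API_TOKEN secret); fail if unset.
function requireEnv(name) {
  const value = process.env[name];
  if (value === undefined || value === '') {
    throw new Error(`Missing environment variable ${name}`);
  }
  return value;
}

// Read an optional integer setting (e.g. a hypothetical POLL_LIMIT), with a default.
function optionalIntEnv(name, fallback) {
  const parsed = Number.parseInt(process.env[name], 10); // NaN if unset or non-numeric
  return Number.isNaN(parsed) ? fallback : parsed;
}
```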

Naming restriction

Variable names cannot start with an underscore (`_`); the character is reserved for internal use.

Environment variables and Workshop Sifts

Variables that you define for Workshop Sifts are branch-specific. For example, variables defined on the master branch will not be available on the development branch.

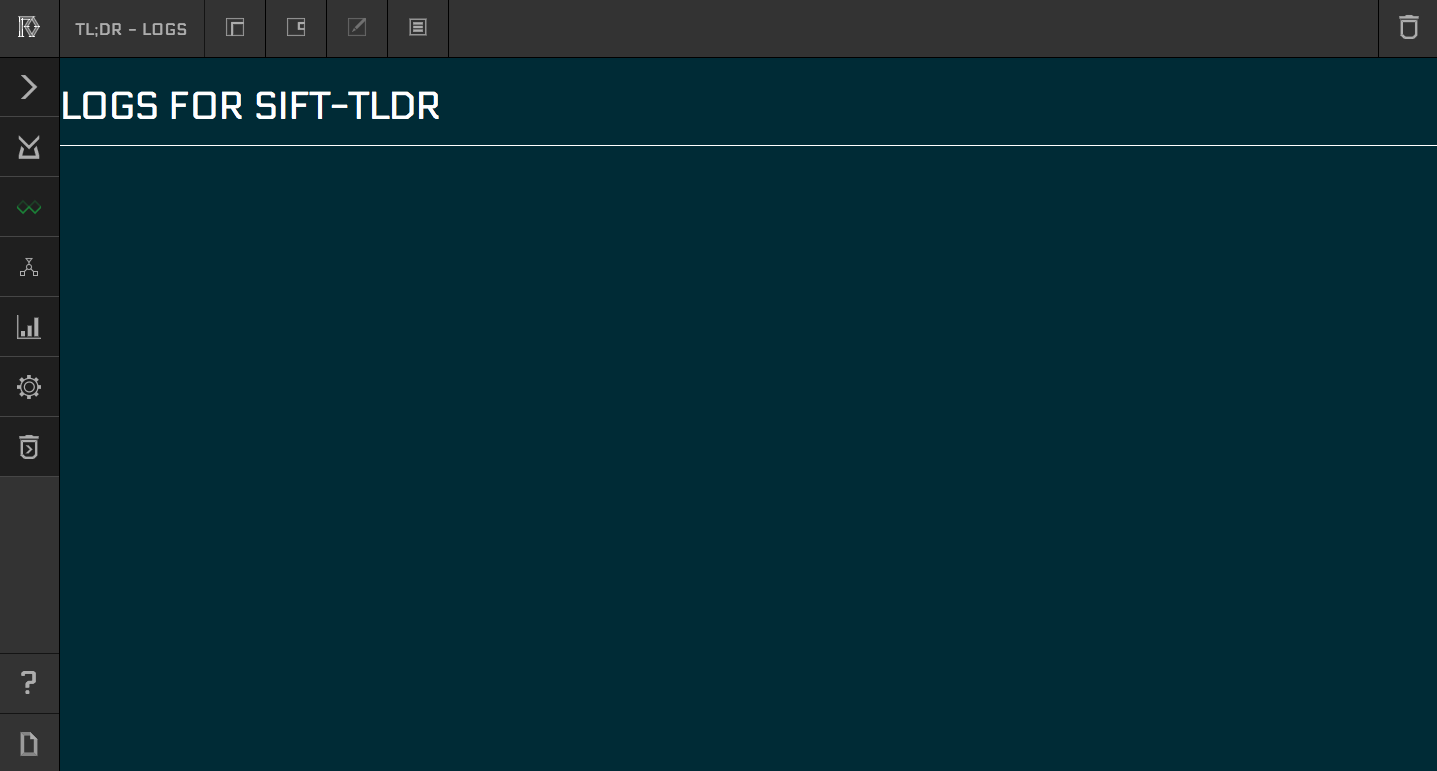

Remote Logs

When a Sift is running on our cloud you can subscribe on the logs that it produces via the Sift Control Panel in our SDK. Just head to the Control Panel the same way we described in the section above and look for the icon that looks like a sheet of paper in the relevant Sift tile. It's next to the keyhole one you can not miss it! After clicking it you should see a screen like this:

Logs and timing

You need to navigate to this page (to subscribe to the logs) before doing anything on our cloud, such as installing the Sift; otherwise you will miss them.
